Pradipta Biswas’ lab works on designing systems and software that help people navigate the real world better

When Pradipta Biswas was diagnosed with myopia and started wearing thick glasses, he was just five years old. But the weight of the glasses did not crush his spirit; he went on to play cricket and practice shooting rifles as a hobby for many years.

“If you want to do something, you can still do it [despite challenges],” he says. “I like to transmit that message.”

An associate professor in the Department of Design and Manufacturing (DDM) at IISc, he continues to apply that message in his work. His research focuses on seamlessly integrating human thoughts and actions with the capabilities of computers and machines. He runs the Intelligent Inclusive Interaction Design (I3D) lab, dedicated to developing and improving human-machine interfaces.

Lending a helping hand

Pradipta’s interest in bridging the human-machine gap began with the idea of helping differently abled individuals perform simple tasks. As a Master’s student at IIT Kharagpur, Pradipta first started developing alternative and augmentative communication aids for children with cerebral palsy. “I felt very happy,” he recalls. “I was working on natural language software that helped these children construct and speak grammatically correct sentences.”

After his Master’s, he received the prestigious Gates scholarship, which helped him pursue a PhD with Peter Robinson at the University of Cambridge. There, he continued his work on building accessible and inclusive tools and systems for elderly people and people with impairments in vision or hearing. A mix of personal and professional reasons then brought him back to India in 2016, when he set up his lab at IISc.

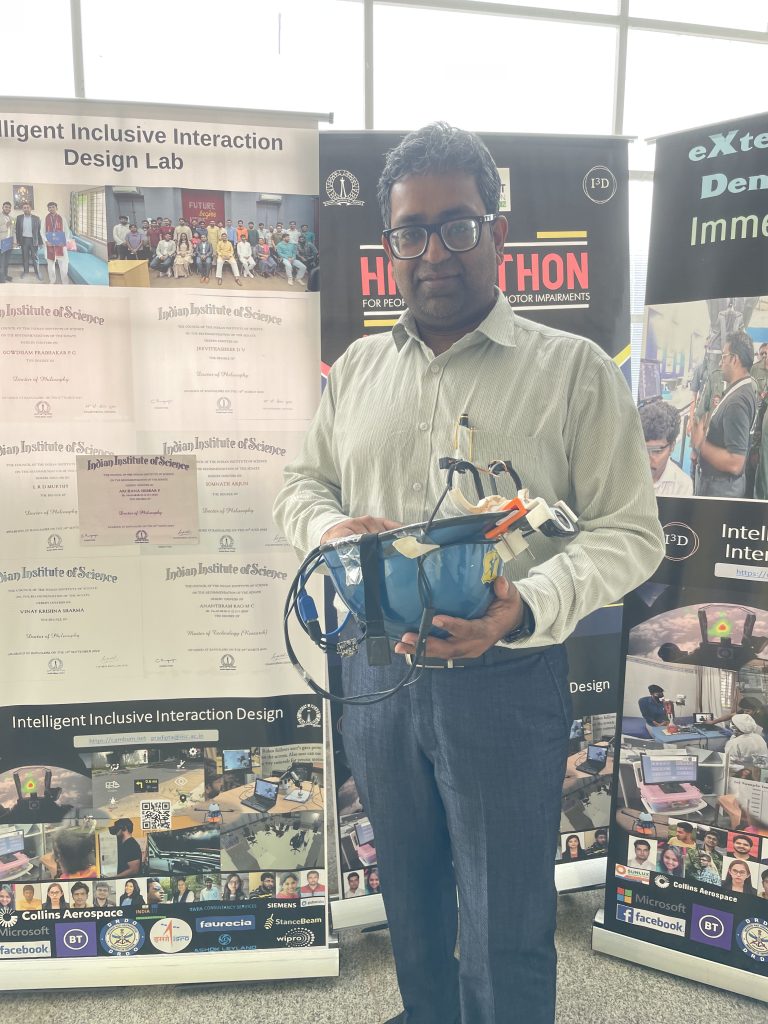

Pradipta’s lab is full of whirring gadgets. One of them is a robotic arm that casually picks up and drops objects, or stamps different signs on its target surface. Except that this arm is controlled by a human user simply moving their gaze over a computer screen. It is part of his ongoing research on helping adults and children with speech and motor impairments.

Coupling eye-tracking software with the robotic arm in an augmented reality setup allows people to control the arm with their gaze. This can help adults with movement disorders do their jobs more easily and help differently abled kids re-discover the simple joy of playing with toys.

“Everyone believes that people with cerebral palsy cannot do many things,” explains Pradipta. “But they can do many things, with appropriate interventions.”

Following the gaze

Apart from assistive tools, his lab has also been developing technology that could help drive cars more easily, fly aircraft better, and manoeuvre spaceships more effectively.

Since his PhD days, Pradipta has continued developing and refining new eye gaze-tracking technologies. Software that can follow the gaze of our eyes and use that information to control different systems can be deployed in many scenarios – from electronic interfaces inside the cockpit of a military aircraft to windshield displays in a moving car.

While driving a car, it is easier to keep our eyes ahead and our hands on the steering wheel. Pradipta’s team has developed an interactive head-up display that would appear on the windshield in front of the driver. This could help drivers perform tasks like turning on the radio by just moving their eyes or pressing a button on the steering wheel.

The team has also developed and tested similar eye-gaze controlled interaction systems in military aircraft, to help pilots not just toggle controls but also lock onto their targets more effectively. Both technologies were first tested in virtual car and aircraft simulators in the lab before being transferred to actual vehicles, and were found to improve the performance of users.

Their eye-gaze tracking technology can also help monitor combat pilots’ gaze during flights and estimate the cognitive load on their brains. Support crew on the ground keeping track of these can step in to intervene if the pilots are struggling with high cognitive loads, Pradipta explains. “When you are flying, if something goes wrong, then someone can take over control and bring the pilot and the plane to safety.”

When reality becomes virtual

Virtual Reality (VR) is another area of Pradipta’s interest. In 2019, the British Telecom India Research Center was launched as a collaboration between British Telecom (BT) and IISc. Under this collaboration, Pradipta’s team has created a VR-based digital twin of the BT office in Bellandur. The project was recently nominated as a finalist for the BT-sponsored National AI awards 2024, with the winner to be announced on 12 September in the UK.

Anyone who puts on a VR headset is immediately transported to a virtual version of the BT office, surrounded by little holographic people and objects. It’s like a video game, but not for playing – just observing. Using simple webcams in the BT office space, the team has built a VR replica of the office, not to track the people themselves but to monitor their use of the space. This has helped the team understand how to optimise the way the office space is used, including the amount of power consumed and the associated costs.

The team has also helped generate VR holograms of parts that need to be assembled to build components in factories. This means that instead of following an instruction manual or watching a 2D video, workers can get a 3D view of how to assemble the parts.

Ajith Krishnan, one of the four astronaut-designates for ISRO’s Gaganyaan mission, has also used VR technology to simulate the spaceflight experience, under Pradipta’s guidance. Ajith was interested in the different ways in which a spaceship can be manually deorbited – returned safely to Earth in case of an emergency. For his MTech project with Pradipta, he and the other participants in the study – six trained military pilots and six civilians with gaming experience – used a custom-made VR space flight simulator. In a study that is yet to be peer reviewed, Ajith and Pradipta showed that a spacecraft with a bottom view – where the entire Earth is visible from below – is better for manual deorbiting manoeuvres than one with a front view, where only a part of Earth’s horizon is visible.

A space to innovate

Pradipta’s work has covered a lot of virtual spaces, but finding enough physical space is, funnily enough, a constant struggle for him.

“A big challenge is having space [to do research],” he says. “I had a similar challenge in Cambridge as well. There was no fixed place for me to keep a TV for the technology I was developing, so, like Atlas, I used to carry around a 60-inch TV.” Providing enough lab and work space for the many students in his lab has been difficult, he says, adding that he wishes he had the room to accommodate more people in his group.

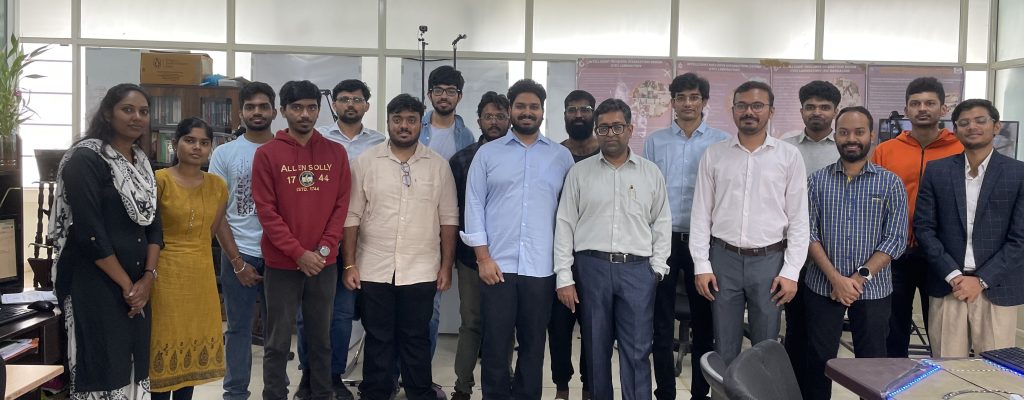

But space or no space, Pradipta says that he loves his job – his favourite part is spending time with students.

“When we meet in Sarvam or somewhere else, when we are all together, and we talk casually … that’s exactly when the innovation happens,” he says. “That’s the part I most enjoy … the casual settings.”

Inspired by how Google was set up in its early days, Pradipta says that he tries to maintain a horizontal architecture in his lab as well, instead of a vertical hierarchy of seniority, age or expertise. He is keen on creating an environment where people can talk to each other freely, and ideas flow back and forth.

“We listen to everyone,” he says. “Everyone has their own expertise, but when we combine things together, something new will work out.”