Soma Biswas’ lab develops algorithms to extract meaningful information from images and videos

During her Master’s degree at IIT Kanpur, Soma realised for the first time that she enjoyed research, problem solving and independent thinking. At the same time, she also mentored undergraduates as a Teaching Assistant and discovered her love for mentoring and working with students. “You’re not really teaching them … but you discuss with them and I enjoyed doing that.”

These experiences coupled with her fascination for computers led Soma to join the University of Maryland, College Park as a graduate student. Later, after working at the University of Notre Dame and GE Research, she finally joined the Department of Electrical Engineering at IISc as an Assistant Professor. Curiosity about what happens to all the images and videos captured by surveillance cameras led her to the field of Computer Vision, and research on face recognition and surveillance technologies. She was fascinated, she says, “by how a simple camera placed somewhere can essentially do something that an expert [in the job] can.”

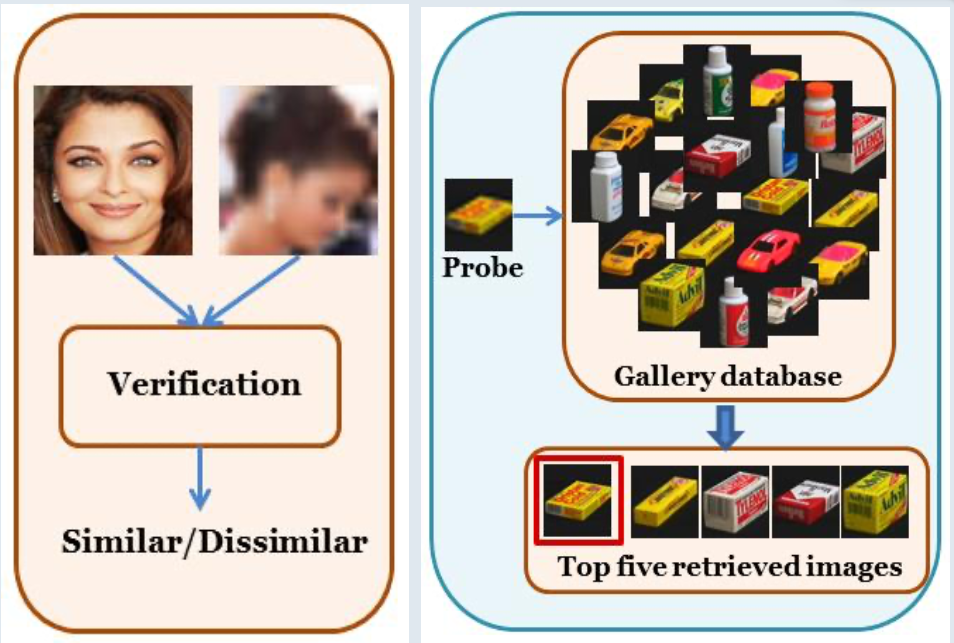

Computer vision deals with developing algorithms and artificial intelligent systems that can derive meaningful information from images, videos, and other visual data. With the recent development of Deep Learning approaches, there has been a boom in the research and development of practical applications of computer vision. We now have algorithms and software that can recognise human handwriting, detect objects, describe images, create tags, and much more.

A deep learning algorithm or model “learns” a lot like humans. Taking the example of object classification, when a child sees a bird for the first time, it asks its parent what that is. The parent says that it’s a bird. The next time a child sees a different bird, it again asks its parent, and this continues a few more times until the child has “understood” what a bird is and no longer asks its parent when it sees one. Next, when the child sees an airplane for the first time, it once again asks its parent. Soon, the child learns to classify things like birds and airplanes as flying objects. When a deep learning model “learns” something, the researcher acts as its parent, giving feedback on each image. The model incorporates the feedback so that it can classify similar images correctly next time. Repeating this loop over enough data produces a model that can work really well on unseen images of the same category which the researcher feeds to the model.

Deep learning has been a game-changer in the field of computer vision. Given enough data, these models can be trained to learn and perform virtually any task. However, getting these voluminous amounts of data is not always possible. The primary focus of Soma’s lab at IISc has been to develop algorithms that can learn using minimal data. One such direction is development of zero-shot or few-shot algorithms, which, after training, require none or only a few labeled examples of each new domain/class to recognise them seamlessly.

Another related problem that the lab works on is domain adaptation. As humans, we can recognise a given object day or night, or even across seasons. But to a computer, every image is just a bunch of pixel values and recognising the same object in different ambient conditions (different lighting or weather conditions or at different times of the day or year) is a great challenge. “You can’t really keep on capturing data and then training [the model] for all possible environmental conditions. So you would want your algorithms to generalise to different conditions or domains,” Soma explains. In an ongoing project on domain adaptation, the lab is trying to generalise an existing algorithm to determine whether a manufactured part in an automobile manufacturing plant is defective from just scanning its image. This generalisation is necessary as the conditions across different manufacturing plants of the same company are not uniform, giving rise to images taken in different conditions – different lighting or camera angles, for example.

Apart from the variety of conditions, there are also different kinds of modalities that a computer has to deal with. According to Soma, recent work from her lab on Cross-Modal Retrieval has made quite a stir in the community. This line of work bridges the gap between the representations of the two modalities and generalises well to other unseen data classes. These approaches can be deployed in various practical applications such as searching for products on e-commerce platforms. The lab has addressed several challenging and lesser explored directions, such as scenarios where the available labels are noisy and where several of the training data do not have annotations. Along the same lines, the lab has also worked on the problem of sketch-based image retrieval, where relevant images can be retrieved using just a rough hand-drawn sketch query. This is especially useful nowadays because of the proliferation of touchscreen devices. Based on the above principles and models, the lab has taken up a new project to detect fake news using data from multiple modalities.

Images courtesy: Soma Biswas

The lab also works on novelty detection and continual learning. In order for algorithms to successfully work after deployment, they need to clearly understand what they know and do not know. When most existing algorithms encounter the image of a new object, they tend to wrongly classify it as something they know. “We need algorithms that can detect if something is new,” Soma points out. The model must first identify new information before incorporating it, to increase its accuracy. “Learning never stops; as humans, we don’t stop at a certain age when we see something new,” she says. Incorporating newfound knowledge and continually increasing the knowledge base of models is another interesting direction that the lab is working on.

In future, Soma wants to work on medical and satellite data, as the data-efficient models that she is currently developing will most likely be useful in such fields where collecting annotated data is really difficult. She also talks about how most of the algorithms out there are practically black boxes. Thus, it is difficult to understand when they work and when they suddenly fail. Developing AI solutions that can give understandable explanations for their output is something that is required in the field of medical sciences, as important decisions that are taken based on AI have to be well defended. She aims to develop algorithms that are, above all, reliable and explainable. Then, anyone working with such an algorithm can have the confidence to trust its predictions and in cases where the algorithm is unsure, it will let the user know.

Soma, now Associate Professor, says that the field is ever-changing and rapidly growing. She mentions that it is very important for a researcher to work on something they are passionate about. “Research has its ups and downs; you can’t work for a particular paper or recognition. You have to work and enjoy your work just for the sake of it. Everything else comes as a byproduct of it.”