Two new studies from the Centre for Neuroscience (CNS), Indian Institute of Science (IISc), explore how closely attention and eye movements are linked, and reveal how the brain coordinates the two processes.

Attention is a unique phenomenon that allows us to focus on a specific object in our visual world, and ignore distractions. When we pay attention to an object, we tend to gaze towards it. Therefore, scientists have long suspected that attention is tightly coupled to rapid eye movements, called saccades. In fact, even before our eyes move towards an object, our attention focuses on it, allowing us to perceive it more clearly – a well-known phenomenon called pre-saccadic attention.

However, in a new study published in PLOS Biology, the researchers at CNS show that this perceptual advantage is lost when the object changes suddenly, a split second before our gaze falls upon it, making it harder for us to process what changed.

“Our study provides an interesting counterpoint to many previous studies which suggested that pre-saccadic attention is always beneficial,” explains Devarajan Sridharan, Associate Professor at CNS and corresponding author of the study.

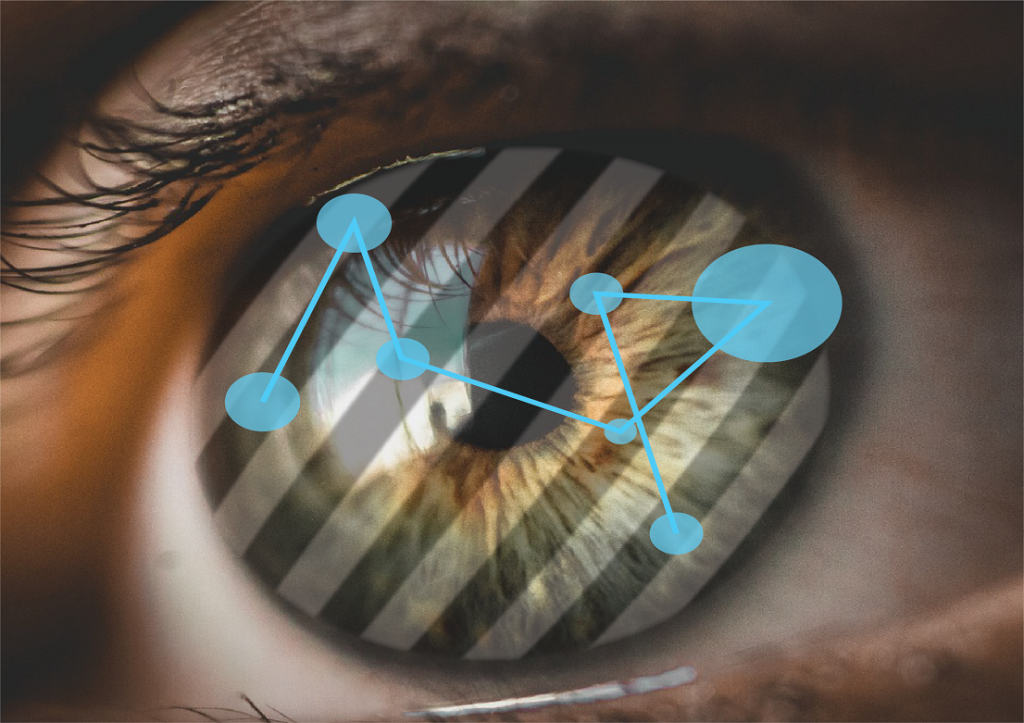

In the PLOS Biology study, Priyanka Gupta, a PhD student in Sridharan’s lab, trained human volunteers to covertly monitor gratings (line patterns) presented on a screen, without directly looking at them, and to report when one tilted slightly. “Importantly, the participants did this task just before their eyes moved, in the pre-saccadic window. So, we were able to study the relationship between pre-saccadic attention and the detection of changes in the visual environment,” explains Gupta. An eye tracker was used to monitor their eye movements before, during and after their gaze fell on the object. “To our surprise, participants found it harder to detect the changes in the pre-saccadic window,” Gupta adds.

In a follow-up experiment, participants monitored two gratings presented in quick succession, again just before their eyes moved. The team found that if the orientation of the second grating changed suddenly during this time, participants tended to mix up the orientations of the two gratings, which explains the loss of the attentional advantage.

“This is essentially a basic science study,” says Sridharan. But such insights, he adds, can be useful for understanding how we track multiple objects in rapidly changing environments, for example in driving or flight simulators.

In the other study, published in Science Advances and carried out with collaborators at Stanford University, the researchers used an unusual experiment in monkeys, this time to decouple attention from eye movements. Their goal was to tease out what happens in the brain while these processes play out.

The monkeys had been trained on a counter-intuitive task called an “anti-saccade” task. As in the human study, the monkeys covertly monitored several gratings on a computer screen without directly looking at them. But when any one grating tilted slightly, the monkeys had to look away from it instead of focusing more sharply on it. This helped the researchers delink the location of the monkey’s attention from the location where its gaze ultimately fell.

Using a special kind of electrode called a “U-probe”, they also recorded signals from hundreds of neurons across different layers of a specific region in the monkey’s brain called the visual cortex area V4. What they found was that neurons in the more superficial layers of the cortex generated attention signals, while neurons in deeper layers produced eye movement signals.

Interestingly, these neurons also showed different activity patterns. “The superficial neurons increased their firing rates, to signal the object that needs to be attended to and prioritised for decision-making,” says Adithya Narayan Chandrasekaran, first author of the Science Advances study and a former research assistant in Sridharan’s lab at CNS. The deep neurons, on the other hand, tuned down their “noise”, possibly to allow the animal to perceive the object better.

The researchers believe that uncovering such brain signatures can eventually point to what fails in attention disorders. Sridharan says, “Discovering such mechanisms is vital for developing therapies for disorders like ADHD.”